SCIENCE BRINGS NATIONS TOGETHER

11th International Conference "Distributed Computing and Grid Technologies in Science and Education" (GRID'2025)

GRID'2025 will take place on 7-11 July 2025

The International Conference "Distributed Computing and Grid-technologies in Science and Education" will be held at the Meshcheryakov Laboratory of Information Technologies (MLIT) of the Joint Institute for Nuclear Research (JINR). The conference GRID 2025 is dedicated to the 115th anniversary of the birth of M.G. Meshcheryakov (1910-1994) and to the 95th anniversary of the birth of N.N.Govorun (1930-1989), the prominent scientists and the Corresponding Members of the USSR Academy of Sciences. GRID 2025 is also dedicated to the 70th anniversary of the Joint Institute for Nuclear Research and to the 60th anniversary of the Meshcheryakov Laboratory of Information Technologies.

Conference Topics:

1. Distributed Computing Systems, Grid and Cloud Technologies, Storage Systems: architectures, operation, middleware and services.

2. High Performance Computing

3. Application software in HTC and HPC

4. Computing for MegaScience Projects

5. Methods and Technologies for Experimental Data Processing

Conference languages – Russian and English.

Contacts:

| Address: | 141980, Russia, Moscow region, Dubna, Joliot Curie Street, 6 |

| Phone: | (7 496 21) 64019, 64826 |

| E-mail: | grid2025@jinr.ru |

| URL: | http://grid2025.jinr.ru/ |

-

-

09:30

→

10:30

Registration MLIT Conference Hall

MLIT Conference Hall

-

10:30

→

13:00

Plenary MLIT Conference Hall

MLIT Conference Hall

-

10:30

ОИЯИ: международный межправительственный научный центр в Дубне. Наука и Перспективы. 1h

-

Speaker: Dr Grigory Trubnikov (JINR) -

11:30

System programming and technologies for creating trusted systems (including artificial intelligence) 30m

-

Speaker: Arutyun Avetisyan (ISP RAS) -

12:00

Высокопроизводительные вычислительные системы с реконфигурируемой архитектурой 30m

-

Speaker: Igor Kaliaev (NII MVUS) -

12:30

Supercomputing co-design: to know, to be able, to master 30m

-

Speaker: Vladimir Voevodin (Lomonosov Moscow State University, Research Computing Center)

-

10:30

-

13:00

→

14:00

Lunch 1h

-

14:00

→

16:00

Plenary MLIT Conference Hall

MLIT Conference Hall

- 14:00

-

14:30

Распределенные вычисления в ОИЯИ: вчера, сегодня, завтра 30m

-

Speaker: Владимир Кореньков (JINR) -

15:00

Создание национальной сети компьютерных телекоммуникаций для науки и высшей школы 1995-2025 гг 30m

-

Speaker: Vasily Velikhov (NRC "Kurchatov Institute") -

15:30

GRID Didn't Take Off, But Is There a Chance? 30m

The GRID concept, as a computing infrastructure, according to its authors, has not been implemented by 2022 [1]. This concept was proposed by Foster and Kesselman [2] in 1999. It should be noted that this concept best suits the needs of modern applications such as on-demand computing, on-demand data storage, etc. [4]. Here is how the authors themselves formulated the main properties of the GRID infrastructure (cited follow [1]):

1. “[C]ordinate resources that are not subject to central control… (The Grid integrates and coordinates resources and users that are in different control domains—for example, a user’s desktop versus a central computing domain; different administrative units of the same company; or different companies; and solves the problems of security, policy, payment, membership, etc. that arise in these conditions. Otherwise, we are dealing with a local control system.)

2. … use of standard, open, general-purpose protocols and interfaces… (The Grid is built from multi-purpose protocols and interfaces that solve such fundamental problems as authentication, authorization, resource discovery, and resource access. … [I]t is important that these protocols and interfaces be standard and open. Otherwise, we are dealing with an application-specific system.)

3. … provide non-trivial qualities of service. (A Grid allows the use of its constituent resources in a coordinated manner to provide different qualities of service, such as response time, throughput, availability, and security, and/or the coordination of multiple types of resources to meet complex user requirements, so that the combined system provides value that is greater than the sum of its parts.)"

Foster and Kesselman in [1] have stated that none of the numerous attempts to implement the GRID concept have yet been successful. None of the projects listed in this paper have been able to implement the above requirements, to automatically minimize the execution time of an application in a computing infrastructure given its time constraints through efficient automatic management of computing load balancing between available computing devices, "non-trivial quality of service", efficient management of data transfer between application components, and the ability to increase computing resources on demand without limitation [1]. The presentation discusses the reasons for this disappointing conclusion that are computer performance, available data rates, and available mathematical methods for managing computing infrastructure resources in the early 2000s were insufficient to achieve these properties.

However, it has been significant breakthrough in the period 2014 and 2024, the server performance increased by about 7 times, supercomputer performance increased by 4 orders of magnitude, and

the maximum data rate increased from several Tbit/s to 1.1 Pbit/s over the same period [3]. These advances have stimulated a breakthrough in mathematical optimization methods based on machine learning. The point is that, given the above-mentioned speed of computation and data transfer, as well as the fact that network services and application components are easily scalable and operate in real time, to optimize resource management, data flows and computations in the next-generation computing infrastructure, let's call it Computing Centric Network (CCN), control algorithms with low time complexity are required, since time delays in decision-making become critical for the efficient operation of CCN. Classical optimization methods [3] are not suitable for this purpose, since they are based on centralized decision-making, i.e., centralized combinatorial enumeration of solution options is carried out using a (deterministic) algorithm capable of finding the best solution to the problem. In addition to computational complexity, this approach is associated with high overhead costs for collecting, processing and transmitting data between components of the computing infrastructure. The report shows that under these conditions, distributed multi-agent optimization (MAO) methods are the preferred choice. In these methods, the solution to the problem is obtained through the self-organization of a distributed set of algorithm agents capable of competition and/or cooperation and having their own criteria, preferences, and constraints. It is considered the solution is found when, the agents reach consensus (temporary equilibrium or balance of interests).References:

- Foster and. C. Kesselman, “The history of the grid,” arXiv preprint arXiv:2204.04312 (2022).

- I. Foster and. C. Kesselman, “The Grid 2: Blueprint for a New Computing Infrastructure” (Elsevier, Amsterdam, 2003).

- R.Smeliansky, E.Stepanov Machine Learning to Control Network Powered by Computing Infrastructure. ISSN 1064-5624, Doklady Mathematics, 2024. © Pleiades Publishing, Ltd., 2024.

- R. Smeliansky, “Network powered by computing: Next generation of computational infrastructure,” in Edge Computing—Technology, Management and Integration (IntechOpen, 2023), pp. 47–70.

Speaker: Ruslan Smelyanskiy (Moscow State University)

-

16:00

→

16:30

Coffee 30m MLIT Conference Hall

MLIT Conference Hall

-

16:30

→

18:30

Plenary MLIT Conference Hall

MLIT Conference Hall

-

16:30

Organization of resource-intensive computational simulation in real time 30m

The concept of “virtual testbed” of real-time computational simulation is based on high-performance algorithms for modeling the physical phenomenon under study without losing the quality of reproducible processes. One of the most complicated and comprehensive areas of this nature is naval hydrodynamics, as it requires the integration of a sufficiently big number of heterogeneous models.

Application of stable implicit numerical schemes is not considered here, since practice shows uncontrolled smoothing of hydrodynamic processes in subareas with large gradients.

Cross-cutting and ubiquitous analysis of simulation results is allowed when using explicit numerical schemes. They allow hybrid rebuilding of physical models and mathematical algorithms directly in the process of interactive control of computational processes. Nevertheless, unfortunately, often in the formulation of computational simulation follows the conclusion about impossibility of simulation with the given engineering accuracy due to excessive demand of computational resources.The problems of naval hydromechanics in the general formulation are reduced to these insoluble problems, aggravated by the lack of effective models for non-stationary processes of hydromechanics as such. In particular, unsolvable difficulties in naval hydromechanics are caused both by criteria of stability in time and by criteria of approximation smoothness for difference representation and differentiation of high-frequency non-stationary physical fields.

Engineering approaches in naval hydrodynamics (as well as in any other complex technical field) traditionally bring any technical problem to an acceptable result, with adequate correspondence to theoretical, empirical or experimental results. In practice, such explorations are limited to private solutions and are often reduced to the author's “know-how”, which is difficult to generalize and rather risky to apply in case of significant changes in the investigated objects.

Organization of expert systems based on high-performance computing systems allows to synthesize various theoretical approaches with engineering methods in naval hydromechanics. The purpose of such systems is to combine context-optimal modules for modeling, visualization and real-time data analysis.

The paper presents the idea of realizing such system in the current edition of the virtual testbed. Successive set of computational models are built from the simplest kinematic representations to complex modules requiring high-performance computing resources. The experience of specialists from researcher to navigator is formalized in the knowledge base of the expert system in the form of rules. According to these rules, based on the external conditions, the state of the object under study, the mode of motion and modeling objectives, the simplest but sufficient computational modules for adequate simulation of ship dynamics are selected in the distributed computing environment. In the future, they are integrated. It is possible on the basis of the concept of a virtual personal supercomputer.Speaker: Prof. Alexander Degtyarev (Professor) -

17:00

Современный подход к созданию решений для задач HPC, ЦОД, облаков 20m

В докладе будут рассмотрены следующие вопросы:

1. Ключевые технологические тренды HPC

2. Тенденции в области развития платформ для HPC, AI/ML, Big Data, классических ЦОД

3. Альтернативная энергоэффективная платформа для HPC, AI/ML, Big Data

4. Универсальный модульный сервер «М1»

5. Варианты исполнения сервера «М1»

6. Применимость «М1» в HPC, AI/ML, Big Data, классических ЦОД

7. Сравнение производительности в задачах HPC, AI/ML, Big DataSpeaker: Sergey Plyusnin ("E-Flops" LLC) -

17:20

Govorun supercomputer for JINR tasks 20m

-

Speaker: Дмитрий Подгайный (JINR) -

17:40

Аппаратно-программные решения РСК на примере расширения СК Говорун 2024-2025 гг 20m

-

Speaker: Alexander Moskovsky

-

16:30

-

18:30

→

19:30

Welcome Party 1h MLIT Conference Hall

MLIT Conference Hall

-

09:30

→

10:30

-

-

09:00

→

11:00

Plenary MLIT Conference Hall

MLIT Conference Hall

-

09:00

Software complex for distributed data processing during Run 9 of the BM@N experiment 30m

Modern physics experiments on particle collisions cannot operate without sophisticated software used at all stages of the work, including the design of the setup, control of the operation of different subsystems, data collection, online and offline processing and final physics analysis. This also holds true for the fixed target experiment, BM@N, the first experiment operating and taking data at the NICA complex in JINR. Since 2015, eight BM@N Runs, including the latest, physics one, have been conducted, and Run 9 with xenon ion beams is scheduled in the coming months. The report presents a set of software systems and services developed to automate BM@N data processing and storing on distributed hardware platforms, and some manual operated procedures during Run 9 of the experiment. Computing software as well as a complex of information systems providing information necessary for event data processing will be discussed. Both newly implemented software of the experiment and proven solutions used in recent BM@N Runs but constantly evolving will be demonstrated. Furthermore, a set of additional, but essential services will be noted, for instance, event daily (and integral) statistics service, which collects and visualizes event distributions divided into days by various parameters.

Speaker: Konstantin Gertsenberger (JINR) -

09:30

Full-scale simulation of the MPD-NICA experimental setup and data analysis techniques 30m

The Multi-Purpose Detector (MPD) is one of the three experiments of the Nuclotron Ion Collider-fAcility (NICA) complex, which is currently under construction at the Joint Institute for Nuclear Research in Dubna. With collisions of heavy ions in the collider mode, the MPD will cover the energy range 4-11 GeV to scan the high baryon-density region of the QCD phase

diagram. With expected statistics of 50-100 million events collected during the first run, MPD will be able to study a number of observables, including measurements of light hadrons and hypernuclei production, particle flow, correlations and fluctuations.

We will present selected results of the full-scale simulation of the MPD-NICA experimental setup for and discuss the data analysis techniques.Speaker: Arkadiy Taranenko (VBLHEP JINR) -

10:00

The SPD Software & Computing project 30m

The Spin Physics Detector (SPD) collaboration is developing a universal detector to be installed at the second interaction point of the Nuclotron-based Ion Collider fAcility (NICA). Along with establishment of facility, refinement of the physical research program, growing up needs in data processing. To address these needs and provide essential IT services, the SPD Software & Computing (S&C) project was launched.

Key Objectives of the Project:

- Develop reliable, efficient, and maintainable software for experimental data processing.

- Establish a sustainable data processing system for both online and offline operations.

- Ensure robust and efficient storage of experimental data.

- Implement SPD-specific information systems and services.This presentation outlines the general structure of the SPD S&C project and highlights the key IT challenges being addressed for the SPD experiment.

Speaker: Dr Danila Oleynik (JINR MLIT) - 10:30

-

09:00

-

11:00

→

11:30

Coffee 30m MLIT Conference Hall

MLIT Conference Hall

-

11:30

→

13:00

Plenary MLIT COnference Hall

MLIT COnference Hall

-

11:30

JUNO distributed computing system 30m

The Jiangmen Underground Neutrino Observatory (JUNO) is an international neutrino experiment and the determination of the neutrino mass hierarchy is its primary physics goal. JUNO established distributed computing system to organize resources for JUNO data processing activities. JUNO is in the commissioning phase now and plans to take data on July, 2025 with 3PB raw data each year. PBs of massive MC data has been generated among JUNO data centers through this system. More than 1PB commissioning data has been transferred successfully to remote data centers in time. The paper will give an overview of the system and describe the preparations for the coming data-taking.

Speakers: Dr Xiaomei Zhang, Dr Xuantong Zhang -

12:00

Distributed computing status at IHEP, CAS 30m

The Institute of High Energy Physics (IHEP), Chinese Academy of Sciences (CAS), employs distributed computing systems to coordinate computing and storage resources across multiple international collaborations. Among these, the Jiangmen Underground Neutrino Observatory (JUNO) is a multipurpose neutrino experiment scheduled to begin official data acquisition in the second half of 2025. The JUNO distributed computing system, based on DIRAC, will handle the annual distribution of 2.4 PB of raw data and 0.6 PB of processed data, with tasks distributed across computing and storage facilities at IHEP, JINR, MSU, CNAF, IN2P3, and other partner institutions. Additionally, the High Energy cosmic-Radiation Detection (HERD) experiment, an upcoming space astronomy and particle astrophysics mission, is set to launch aboard China’s space station in 2027. HERD will also adopt a distributed computing architecture, using Rucio and DIRAC to generate and distribute approximately 90 PB of data over its 10-year mission. Data processing will be shared between Chinese and European computing sites. This report presents the research and applications of distributed computing systems in JUNO, HERD, and potential future IHEP-supported experiments. Key topics include distributed computing frameworks, grid middleware, and customized production services developed to meet experimental requirements.

Speaker: Xuantong Zhang (Institute of High Energy Physics, Chinese Academy of Sciences) -

12:30

DIRAC status and further evolution 30m

-

Speaker: Andrei Tsaregorodtsev (CPPM-IN2P3-CNRS)

-

11:30

-

13:00

→

14:00

Lunch 1h

-

14:00

→

16:00

Application software in HTC and HPC Room 406

Room 406

-

14:00

Развитие системы мониторинга вычислительных ресурсов Гетерогенной платформы HybriLIT 15m

В докладе представлен обзор систем мониторинга различных компонент Гетерогенной платформы HybriLIT. Сформулированы цели и назначение применяемых систем, являющихся одним из важных инструментов системного администрирования платформы.

Для контроля за состоянием вычислительных ресурсов применяется разработанная ранее система мониторинга, которая позволяет в реальном времени отслеживать загрузку CPU и GPU компонентов вычислительного узла, использование оперативной памяти и систем хранения данных, объём сетевого трафика и т.п.

В докладе представлена новая система мониторинга, являющаяся логическим развитием разработанной ранее системы, обеспечивающая ряд дополнительных функций для контроля за состоянием вычислительных ресурсов платформы.Speaker: Дмитрий Беляков (MLIT JINR) -

14:15

Разработка системы аккаунтинга и обработки статистики использования вычислительных ресурсов суперкомпьютера «Говорун» 15m

В докладе рассматривается разработка системы аккаунтинга и обработки статистики использования вычислительных ресурсов суперкомпьютера «Говорун». Основное внимание уделено методам обработки и визуализации статистических данных, позволяющим оценивать эффективность использования вычислительных ресурсов и определять дальнейшие задачи по администрированию для оптимизации работы пользователей на суперкомпьютере.

Для решения задачи в качестве одного из компонентов системы была использована платформа Yandex DataLens — система бизнес-аналитики с открытым исходным кодом. Разработанная система формирует и визуализирует заранее подготовленные статистические данные по использованию вычислительных ресурсов в виде круговых диаграмм и сводных таблиц.

В докладе представлены структура системы, функциональные возможности и примеры практического применения. Система позволяет оценивать вклад различных групп пользователей в процентном отношении по ключевым метрикам, таким как количество счётных задач и общее количество затраченных ядро-часов.Speaker: Мария Любимова -

14:30

Экосистема ML/DL/HPC для прикладных исследований 15m

В докладе будет представлено описание структуры и характеристик экосистемы ML/DL/HPC, построенной на базе многопользовательской среды разработки JupyterLab. Приведен обзор используемых технологических решений и решаемых задач.

Первая задача: инструментарий для публикации файлов Jupyter Notebook в формате электронных публикаций Jupyter Book для задач моделирования гибридных наноструктур сверхпроводник/магнетик (совместный проект ЛИТ и ЛТФ). Подготовленные материалы позволяют проводить учебные курсы и мастер-классы для пользователей, сотрудников ОИЯИ и студентов.

Вторая задача: сервисы для анализа траекторий мелких лабораторных животных в поведенческом тесте «Водный лабиринт Морииса» и веб-сервис для детекции и анализа радиационно-индуцированных фокусов (в рамках совместного проекта ЛИТ и ЛРБ).

Третья задача: полигон для квантовых вычислений, на котором установлен ряд квантовых симуляторов, в том числе для работы с квантовыми нейронными сетями.Speaker: Mr Максим Зуев (MLIT JINR) -

14:45

Сравнительный анализ эффективности параллельных вычислений на CPU и GPU для расчёта физических характеристик сверхпроводниковых квантовых интерференционных устройств 15m

В рамках совместного проекта ЛИТ и ЛТФ ОИЯИ развивается инструментарий для исследования систем, основанных на джозефсоновских переходах на базе Jupyter с использованием Python-библиотек. Разработанные алгоритмы размещаются в Jupyter Book, который дает возможность также добавлять реализованные модели, следить за всеми этапами математического моделирования и реализация вычислительных схем. Отметим, что ряд задач требует проведения многочисленных ресурсоемких расчетов, что приводит к необходимости существенного ускорения вычислительных схем, реализованных на Python.

В докладе представлены результаты сравнительного анализа параллельных вычислений проводимых на CPU и GPU с использованием библиотеки Numba JIT-компилятор (Just-In-Time) для языка программирования Python, на примере моделирования физических характеристик сверхпроводникового квантового интерферометра с двумя джозефсоновскими переходами (superconducting quantum interference device ¬– SQUID, СКВИД).

Вычисления проводятся на базе экосистемы ML/DL/HPC Гетерогенной платформы HybriLIT (ЛИТ ОИЯИ). Работа выполнена при поддержки РНФ в рамках проекта № 22-71-10022.

Ключевые слова: Python, математическое моделирование, джозефсоновские переходы, параллельные вычисления.Speaker: Mrs Adiba Rahmonova (Joint Institute for Nuclear Research) -

15:00

Data Storage Redundancy: the Two Matrix Toolkits for Failed Device Reconstruction 15m

The network storage systems are treated, organized into several groups of devices with data redundancy capability.

We consider two schemes for managing data reconstruction process, namely the

declustered Redundant Array of Independent Disks (RAID) technique and, more generally, the Reed-Solomon (RS) error correction

coding within the framework of the Welch-Berlekamp algorithm.

These approaches essentially exploit the properties of circulant or Hankel matrices.The reconstruction of all the units in the failed device causes a certain read/write load onto the survived devices. One of the major requirements to the storage device array is to manage the chunk group distribution across devices to produce a balanced read/write load on survived devices regardless of the location of the failed device. Construction of the data layout

with the aid of an appropriate circulant matrix provides one with such an opportunity. This surprisingly relates to the classical Graph Coloring problem.For the problem of the error locator polynomial construction in the RS-coding, we propose an effective algorithm for recursive computation of the potential candidates in the form of Hankel polynomials.

Speaker: Alexei Yu. Uteshev (St.Petersburg State University) -

15:15

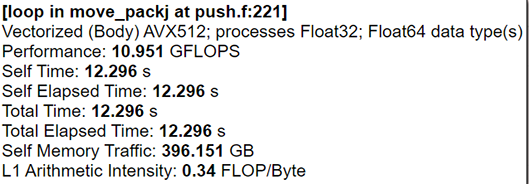

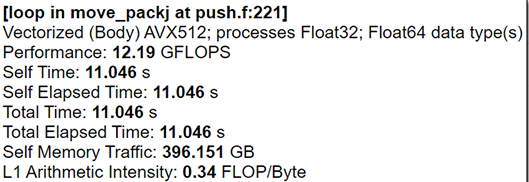

AVX-512 Optimization of Plasma Physics Solver 15m

Particle-in-cell (PIC) numerical simulations are widely used for the numerical modeling of plasma physics problems. These simulations are used primarily, but not exclusively, to study the kinetic behavior of the particles. For example, we used this method for numerical simulations of physics in linear particle accelerators [1,2].

The main idea of the method is to describe the plasma as a set of electrons and ions, which are modeled as discrete entities that move in continuous fields that are calculated on a computational mesh. The calculation of the motion of each particle is defined by electromagnetic fields. In our case, we use our modification of the finite-difference time-domain (FDTD) method as a discretization technique. A de-tailed description of the numerical method and scheme parallelization can be found in 3.

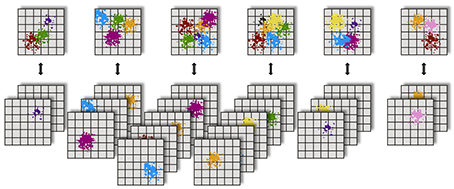

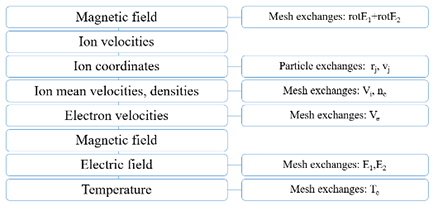

Figure 1 and 2 are briefly shows particle-domain decomposition and block-scheme of the algorithm for a one-time step. In our code, we apply a hybrid decomposition, divid-ing the computational domain along the z-axis into subdomains and assigning a group of processes to each subdomain. Within each group, particles are distributed almost uniformly: this uniformity is ensured by the even distribution of particles at the start, during the injection, and the exchanges by approximately equal quantities of particles with random processes of neighboring groups. A master processor of each group provides 3D arrays of the electromagnetic fields to his group and then gathers 3D arrays of the densities and mean velocities. During the Eulerian stage, the computations and the corresponding data exchanges with the ghost node values be-tween neighbor processes are performed only by the master group processes.

2 Vectorization

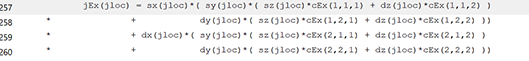

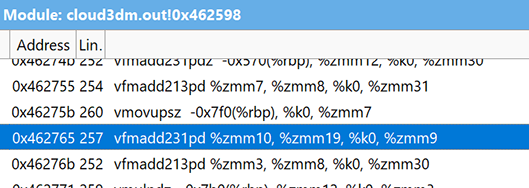

In our previous works 4, we used AVX512 intrinsics for numerical algorithm realization. However, the result of this approach is architecture-dependent code. At this moment, we are writing C++ or Fortran codes with different tricks that help the compiler build effective AVX512 code. In the case of our plasma physics solver, we have two of the “heaviest” functions which calculate particle data and density. For the autovectorization, we changed our mathematical expressions to ax+bx+c view. All division operations have been moved to separate operations. This approach helps the compiler to build FMA instructions using ZMM AVX-512 registers (see Fig.3 and Fig.4).

We also need to find data size parameters and particle pack size for better per-formance because of the specific particle-domain decomposition. Our tests showed that particle pack size can speed up particle data calculations function by up to 16% (see Fig.5 and Fig. 6) on the same data set.

Acknowledgments. This work was supported by the Russian Science Foundation (project 19-71-20026).

References

1. Chernykh, I., Kulikov, I., Vshivkov, V., Genrikh, E., Weins, D., Dudnikova, G., Cher-noshtanov, I., Boronina, M.: Energy Efficiency of a New Parallel PIC Code for Numeri-cal Simulation of Plasma Dynamics in Open Trap. Mathematics, 10, 3684 (2022).

2. Chernoshtanov, I.S., Chernykh, I.G., Dudnikova, G.I., Boronina M.A., Liseykina, T.V., Vshivkov, V.A. Effects observed in numerical simulation of high-beta plasma with hot ions in an axisymmetric mirror machine. Journal of Plasma Physics, 90, 2, 905900211 (2024).

3. Boronina, M.A., Chernoshtanov, I.S., Chernykh, I.G. et al: Three-Dimensional Model for Numerical Simulation of Beam-Plasma Dynamics in Open Magnetic Trap. Loba-chevskii J Math 45, 1–11. doi:10.1134/S1995080224010074 (2024)

4. Glinsky B., Kulikov I., Chernykh I., Weins D., Snytnikov A., Nenashev V., Andreev A., Egunov V., Kharkov E. The Co-design of Astrophysical Code for Massively Parallel Supercomputers. Lecture Notes in Computer Science, 10049, pp.342-353. DOI: 10.1007/978-3-319-49956-7_27 (2016)Speaker: Igor Chernykh (Institute of Computational Mathematics and Mathematical Geophysics SB RAS) -

15:30

Parallel Particle-in-Cell based numerical model for the study of terahertz emission from laser-ionized gas targets 15m

A parallel numerical model for studying the generation of intense terahertz radiation in the interaction of bichromatic infrared laser pulses with a neutral gas and the results of simulations performed on its basis are presented. A fully kinetic model consisting of the Vlasov equations for the plasma distribution functions and Maxwell equations for the self-consistent electromagnetic field is used to simulate the macroscopic response of the plasma generated during ionization of a gas target by a laser field. Field ionization is taken into account within the framework of the cascade mechanism [1]. The numerical code is based on the Particle-in-Cell method and uses a finite difference time domain scheme (FDTD) for electromagnetic fields [2], a Boris pusher to update the positions and velocities of particles, and a charge conservation method [3] to fulfill Gauss's law for the electric field. The energy losses associated with ionization are accounted for by introducing an ionization current. Parallelization is achieved by domain decomposition. The distribution of numerical data between processing units and mutual exchanges are handled by MPI subroutines.

The work was supported by Russian Science Foundation within grant No. 24-21-00037. Numerical simulations were performed at the Joint Supercomputer Center of the Russian Academy of Sciences and at the Siberian Supercomputer Center of the Siberian Branch of the Russian Academy of Sciences.

References

1. V.S. Popov, "Tunnel and multiphoton ionization of atoms and ions in a strong laser field (Keldysh theory)", Phys. Usp. 47, N. 9, 855-885 (2004)

2. A. Taflove, S. C. Hagness, "Computational Electrodynamics: The Finite-Difference Time-Domain Method", 2nd ed. (2000), Chap. 5.8

3. T. Zh. Esirkepov, "Exact charge conservation scheme for Particle-in-Cell simulation with an arbitrary form-factor", Comp. Phys. Comm. 135 (2), 144–153 (2001).Speaker: Dr Татьяна Лисейкина (ИВМиМГ СОРАН) -

15:45

Полигон для визуализации данных компьютерной томографии головного мозга 15m

На ресурсах экосистемы ML/DL/HPC Гетерогенной платформы HybriLIT разворачивается полигон для визуализации данных компьютерной томографии головного мозга. Полигон позволит строить изображения 3D объектов с использованием программных обеспечений для обработки, анализа и визуализации человеческого мозга. В дальнейшей перспективе полигон позволит внедрить новый математический аппарат улучшения качества полученных данных.

Speaker: Анастасия Аникина (MLIT, JINR)

-

14:00

-

14:00

→

16:00

Computing for MegaScience Projects Room 420

Room 420

-

14:00

UNAM-JINR network connectivity status 15m

One of the key elements we must verify before joining a Grid infrastructure is network connectivity. A high bandwidth is required to move a large volume of data, and this must be accompanied by optimized routing.

Based on our previous experience with the Grid in ALICE (WLCG), AUGER (EGI), and EELA (Europe-Latin America), optimizing the path is challenging when Grid resources are distributed across several countries, and the National Research and Education Networks (NRENs) must adjust the routes accordingly.

For NICA, we have the support of CUDI (the Mexican NREN). We are working with them to resolve asymmetric routing and enhance connectivity to JINR. Additionally, with the support of CENIC in California, USA, we plan to explore connectivity to JINR through the Pacific Wave link.

Finally, with the added network traffic from several international collaborations and the hardware upgrade between CUDI and CENIC, we

anticipate a network bandwidth upgrade for UNAM shortly.Speaker: Luciano Diaz (ICN-UNAM) -

14:15

New design of tools for accessing the ATLAS CREST conditions database in Athena 15m

Athena is the ATLAS software framework that manages nearly all ATLAS production workflows. Most of these workflows rely on accessing data in the conditions database. CREST is a new conditions database project designed for production use in Run 4. Its primary goals are to evolve the data storage architecture, optimize access to conditions data, and enhance caching capabilities within the ATLAS distributed computing infrastructure. During the development of the CREST prototype, a new tool for interacting with the conditions database was integrated into Athena. Initially, this tool was based on the existing COOL implementation, enabling rapid testing of the new database in production workflows. However, due to maintenance challenges and the tool’s limited accommodation of CREST-specific features, a decision was made to redesign it. This article describes the new design for accessing CREST data from Athena. The redesigned toolkit simplifies maintenance, consolidates numerous metadata handling methods into a single class, and introduces a class for serializing and deserializing CREST data. This approach supports flexible handling of various data storage formats in CREST.

Speaker: Mr Evgeny Alexandrov (JINR) -

14:30

SPD offline computing software architecture and current status 15m

The SPD (Spin Physics Detector) facility at the NICA accelerator complex at JINR is under construction. In addition to the physics facility itself, the software for the future experiment is also being developed. There is already a constant demand for sufficiently large-scale data productions to simulate physical processes in a future experiment. To facilitate their implementation, MLIT staff are developing a set of systems and services that allow for the orderly storage and processing of experimental data both on JINR resources and on the resources of the institutes that are members of the SPD collaboration and, in common, forming a distributed computing environment of the experiment. The distributed computing environment is in trial operation, but it is already running full fledged productions based on requests from physics groups. Over the past six months, the system has modeled more than 1 billion physical events and generated more than 200 TB of data. An overview of the recent developments in the SPD offline software is presented in this talk.

Speaker: Artem Petrosyan (JINR) -

14:45

SPD data management 15m

Active work continues on the creation of the SPD (Spin Physics Detector) facility at the NICA accelerator complex, which is located at the Joint Institute for Nuclear Research (JINR). Since the facility will collect a large amount of data, data processing and storage will be carried out in a distributed computing environment. In this regard, there is a need for specialized software for effective data management.

At the current stage of research, significant amounts of data have already been accumulated, and the Rucio system is used as a management tool, a standard solution for data management in the field of high energy physics. The report will present the experience of putting Rucio into operation for the SPD experiment. Integration with other services, development of additional utilities, automation of work processes and development of internal monitoring will also be considered.Speaker: Alexey Konak (JINR) -

15:00

Sampo: software platform for SPD data processing 15m

The Spin Physics Detector is a universal detector to be installed in the second interaction point of the NICA collider to study the spin structure of the proton and deuteron. Each large HEP experiment needs it's own applied software for handling generation, simulation, reconstruction and physics analysis tasks. Due to the commonality of such tasks among different experiments dedicated libraries and frameworks were developed. Gaudi is one of such physics frameworks which proved it's reliability and convenience by being used by many collaborations. Sampo is a Gaudi-based program platform which is now under development to serve the needs of SPD collaboration. Sampo is tended to replace the current SPD applied software SpdRoot.

Speaker: Лев Симбирятин (JINR) -

15:15

Распределённая параллельная файловая система Lustre для обработки и анализа данных мегасайенс-проекта NICA 15m

В рамках доклада предложено решение на основе распределенной параллельной файловой системы Lustre для быстрого копирования данных и выполнения расчётов на СК Говорун и вычислительном кластере NCX, включающее режим отказоустойчивости на основе компонент Lustre и программных пакетов Pacemaker/Corosync. Разработанная архитектура построена на основе современного серверного оборудования с высокоскоростным подключением к сети, и имеющее географическое размещение в разных зданиях института. Приведены результаты тестирования производительности распределённой параллельной файловой системы Lustre, полученные на основе работы программной утилиты IOR, работающей на основе технологии MPI, а также работы счётных задач пользователей.

Speaker: Aleksander Kokorev -

15:30

Information Systems for the SPD Experiment 15m

The SPD experiment will have to collect large amount of data: up to trillion events (records of a collision results) will have to be stored and analyzed, producing around ten petabytes yearly. A similar amount of simulated particle collisions for use in detector data analysis will be produced. This information will be distributed between a number of computing sites on a various storage locations, with duplication to avoid data loss and improve performance. The processing of the experimental data requires a wide variety of auxiliary information from many systems. To effectively access and handle all this data, as well as to operate detector itself, a number of information systems (IS) have to be created. A catalog of hardware components that SPD detector (Hardware database) is being developed, to provide information necessary in detector maintenance, data acquisition and processing. To support ongoing production of simulated data, a number of registries will be developed, including production registry, software version registry, geometry and magnetic field map registries. A catalog of hardware components that SPD detector (Hardware database) is being developed, to provide information necessary in detector maintenance, data acquisition and processing. With the development of the software framework and data model a catalog of the SPD physics events (Event Index) will be created to help to search and acess event data in the distributed storage system.

Speaker: Dr Федор Прокошин (JINR) -

15:45

Design of the BM@N experiment data management system 15m

The Data Management System (DMS) design for BM@N, a fixed target experiment of the NICA (Nuclotron-based Ion Collider fAcility) is presented in this article. The BM@N DMS is based on the DIRAC Grid Community. This system provides all the necessary tools for secure access to the experiment data. The key service of the system is the File catalog, presenting all the distributed storage elements as a single entity for the users with transparent access. The file catalog also includes a metadata catalog, it can be used for an efficient search of the data necessary for a particular analysis. Access is provided via a REST API and a C++ interface, with authentication via BM@N SSO. The REST API helps to integrate the DMS with other software systems of the experiment, while the C++ interface allow BmnRoot to conveniently select events for a particular physics analysis.

Speaker: Igor Zhironkin (Sergeevich)

-

14:00

-

14:00

→

16:00

Distributed Computing Systems, Grid and Cloud Technologies, Storage Systems MLIT Conference Hall

MLIT Conference Hall

-

14:00

Distributed computing infrastructure for the SPD experiment 15m

The SPD experiment at the NICA collider involves not only the processing of multiple petabytes of data per year obtained from the detector, but also the production of similar amounts of data as part of the modeling of physical processes and expected signals from the front-end electronics. Because of this, the SPD experiment relies heavily on distributed computing for offline data storage and processing. In this talk we present preliminary steps to build a distributed computing infrastructure for the SPD experiment, including the network backbone, storage and computing facilities at participating parties, their software components and configuration along with some higher-level software components necessary for the smooth operation of such infrastructure.

Speaker: Mr Andrey Kiryanov (PNPI of NRC KI) -

14:15

INP BSU site: status update 15m

Status of the INP BSU grid site presented. The experience of operation, efficience, flexibility of the cloud based structure is discussed.

Speaker: Dmitry Yermak (Institute for Nuclear Problems of Belarusian State University) -

14:30

Using the StackStorm automation engine for workflow orchestration in the complex Linux-based production environment of the computing center at NRC “Kurchatov Institute” – IHEP 15m

Managing a complex Linux-based production environment of the computing center is a highly challenging operational task. Such tasks require a high level of automation in distributed multi-component systems, which must be applied to complex operational workflows. There are several approaches to achieve this goal, including: creating operational scripts based on Linux shell commands and programming languages, using specialized software for specific tasks (backups, configuration, management) or employing advanced orchestration tools. Among these solutions, the StackStorm automation engine stands out. This paper describes the use of this platform for orchestrating operational workflows such as distributed backups, distributed system upgrades and distributed system administration in the Linux-based computing center at NRC «Kurchatov Institute» – IHEP.

Speaker: Anna Kotliar (IHEP) -

14:45

Development of a knowledge base system for administration of the NRC 'Kurchatov Institute' – IHEP computing center based on Linux history tools 15m

Computer administration of complex computing systems and distributed computing clusters presents significant challenges. Each sophisticated computer system has unique architecture and software configurations, it is custom-built and it is optimized for specific operational requirements and support conditions. Long-term system administration of such environments includes daily execution of various server commands - both for troubleshooting and for monitoring system behavior under different operational loads. These accumulated commands can be transformed into a valuable knowledge base for future use. This paper describes the development of such knowledge management system using Linux history tools at NRC “Kurchatov Institute” – IHEP

Speaker: Maria Shemeiko (Institute for High Energy Physics named by A.A. Logunov of National Research Center “Kurchatov Institute”) -

15:00

JUNO Distributed Computing System Monitoring 15m

The Jiangmen Underground Neutrino Observatory (JUNO) is a major international neutrino experiment located in Kaiping City, Guangdong Province, southern China. To support its large-scale data processing needs, JUNO has adopted a distributed computing model based on the Worldwide LHC Computing Grid (WLCG) architecture. The JUNO distributed computing infrastructure includes collaborative sites from China, Italy, France, and Russia.

To ensure the stability, efficiency, and accountability of this international computing network, we have developed a monitoring system tailored for JUNO’s distributed computing environment. This system is designed to continuously track the operational status of computing sites and core services, as well as to account for cumulative resource usage across all participating centers. Leveraging a dedicated workflow management tool, it executes site-level Service Availability Monitoring (SAM) tests and aggregates diagnostic and performance metrics.

Currently, the system provides real-time data collection and interactive visualization capabilities across several critical areas, including site availability and reliability, data transfer performance, computing and storage resource statistics, and the status of essential grid and cloud services. This monitoring framework provides essential support for the daily operation and performance analysis of JUNO’s distributed computing system.

Speaker: Xiao Han (Institute of High Energy Physics, CAS) -

15:15

Мониторинг и автоматизация управления инженерной инфраструктуры Лаборатории Информационных Технологий им.М.Г.Мещерякова. 15m

TBA

Speaker: Станислав Паржицкий (ОИЯИ) -

15:30

Federated Analytics and Agents Architecture 15m

Federated data analysis is a technology for building distributed data analysis systems where no data is moved from their storage (collection) locations for analysis. This is a data analysis technology that defines a new level of access and transfers analysis and calculations directly to where the data is located. The World Economic Forum notes that, in fact, 97% of collected healthcare data is unused – it cannot be directly downloaded (uploaded to external sites), and there are no tools for federated analysis. The vast amount of data produced by healthcare institutions around the world remains underutilized. The state of affairs with research data is likely even worse. Federated data analysis allows researchers to safely analyze data from different organizations. Restrictions on data access, cybersecurity requirements, and the development of edge device intelligence are all factors that will ensure growing interest in this technology. The paper examines issues of designing the architecture of such systems.

Speaker: Dmitry Namiot -

15:45

Multi-agent Traffic Load Balancing by Agents with Two-Layer Control Plane 15m

This study addresses traffic load balancing (TLB) problem in Computing Centric Network (CCN) – an open, software-defined virtualized infrastructure that integrates distributed computing with high-speed data networks (DTN). Distributed TLB methods based on Multi-agent reinforcement learning (MARL) are quite perspective due to faster decision making and its adaptability to dynamic network traffic fluctuations. Despite the existing approaches such as Multi-agent routing using Hashing method (MAROH) showed better results than traditional approaches like ECMP and UCMP, and comparable results to centralized method, there are still too many inter-agent communications that slow down decision making and degrade channel bandwidth utilization efficiency.

Our key contribution is a two-layer MARL control plane, where agents may act based on its previous experience, stored in local memory, or communicate to make coordinated action. The proposed approach was implemented as an enhancement for MAROH. Experiment results showed that this approach reduces inter-agent communications by 80% while improving the objective function (sum of deviations from average link utilization) by 30%.Speaker: Evgeniy Stepanov (Lomonosov Moscow State University)

-

14:00

-

14:00

→

16:00

Methods and Technologies for Experimental Data Processing Room 310

Room 310

-

14:00

Muon Shield optimization for SHiP experiment as HTC MC task 15m

SHiP (Search for Hidden Particles) is a new general-purpose experiment at the SPS ring at CERN, aimed at searching for hidden particles proposed by numerous theories beyond the Standard

Model. An important element of the experiment is muon shield. On one hand, it must provide good background suppression, and on the other hand, it should not be too heavy. The Muon shield configurations was obtained using Bayesian optimization with several types of surrogates. This allowed for effective global multidimensional optimization in a 42-dimensional space and reduced the muon flux by 2.5 times while maintaining the original mass of the shield. A large number of MC Geant simulation tasks were performed on the Yandex Cloud Kubernetis cluster. The paper presents our ideas and approaches that we used to reduce the amount of computation while keeping the accuracy at an acceptable level.Speaker: Евгений Курбатов -

14:15

Implementing the universal framework for analysis of anisotropic flow for MPD and BM@N 15m

The momentum anisotropy of particles produced in heavy-ion collisions serves as a sensitive probe of the matter formed in the collision overlap region. While detector effects can significantly distort the measured values of this observable, techniques exist to correct for acceptance non-uniformities and non-flow correlations. Developing an experiment-independent framework for anisotropic flow measurements can greatly simplify the process of obtaining robust physical estimates. We present QnTools, a universal software package designed for analyzing flow and polarization of particles produced in collisions. We demonstrate its application in extracting directed flow in the BM@N experiment and evaluating the performance of the MPD experiment for anisotropic flow measurements.

Speaker: Mikhail Mamaev (NRNU MEPhI) -

14:30

Implementation of ACTS-based track reconstruction for the forward detector in the MPD experiment at NICA 15m

Multi-Purpose Detector (MPD) is aimed at the extensive investigation of

the properties of dense QCD matter created in heavy ion collisions. The

forward tracking detector would extend available rapidity range from

$|y| < 1.2$ to $|y| < 2.5$, which is critical for the studies of various

observables that can be used to probe the properties of the produced

matter. The main challenges for the detector are the momentum resolution

limited by the radial distance available for the track curvature

measurement that is strongly reduced at high pseudorapidities, large

material budget in front of the detector and high occupancy expected in

central heavy ion collisions.ACTS (A Common Tracking Software) is extensively used for the forward

detector design developments. It provides a set of

experiment-independent tools for particle track reconstruction,

implemented with modern software concepts. The set includes the Kalman

filter for track fitting, seeding tools and combinatorial Kalman filter

for track finding. Coupled with an independent geometry description,

these algorithms can be adapted to various detector types.Performance of ACTS-based implementation of track reconstruction was

tested both in simplified and realistic environments in terms of event

multiplicity. In this report, we will discuss the results of the forward

tracker performance studies.Speaker: Evgeny Kryshen (JINR, PNPI) -

14:45

Data Shift Problem in Machine Learning for Particle Identification 15m

Particle identification (PID) is an essential step in the data analysis workflow of high-energy physics experiments. Machine learning approaches have become widely used in high-energy physics problems in general, and in PID in particular for the last ten years. Due to the fact that conventional algorithms of PID have poor performance in the high momentum range. However, due to the absence of ground-truth labels in experimental data, classifiers must be trained on Monte Carlo (MC) simulations. This creates a fundamental challenge: differences between the simulated and real data distributions known as data shift. It can significantly affect model generalization and performance. The impact of data shift was explored by comparing particle classification results across several MC datasets generated with different simulation settings. How the distributions of key features (momentum, energy, velocity, mass squared) vary between simulations was analyzed. The results highlight the need to carefully validate and adapt machine learning models to ensure reliable performance on data with potentially shifted distributions, especially in scenarios where real labels are unavailable.

Speaker: Vladimir Papoyan (JINR & AANL) -

15:00

Logistic regression method for particle identification in MPD experiment 15m

This work shows the application of logistic regression model for particle identification task in Multi Purpose Detector (MPD) experiment on Nuclotron based Ion Collider fAcility (NICA) at Joint Institute for Nuclear Research. The model has been tried on Monte-Carlo dataset provided by MPDRoot software package and compared against n-sigma method, included in MPDRoot, and XGBoost gradient boosted decision tree method, previously investigated. Feature importance analysis was conducted to explore the possibility to decrease model size and increase computational speed.

Speaker: Danila Starikov (RUDN University) -

15:15

Effective numerical-analytical method for modeling the dynamics of a fuel cell system for a pulsed-type reactor 15m

Modeling the dynamics of a dissipative system of interacting fuel elements of the new-generation NEPTUN pulse reactor is considered from the standpoint of Hamiltonian formalism. An exact analytical expression is obtained within the “zero” approximation, it describes the evolution of the phase portraits of the system and allows for an efficient numerical implementation on the architecture of GPU graphics processors.

The algorithm enables to find natural frequencies and oscillation modes, as well as to optimize system parameters to assess the stability of the reactor operation.Speaker: Mikhail Klimenko -

15:30

GAN-based simulation of microstrip triple GEM detector in the BM@N experiment 15m

The triple GEM detector is one of the basic components of the hybrid tracking system in the BM@N experiment. It consists of gas chambers located along the beam axis, designed to register particles passing through matter in the form of responses on a microstrip readout plane. The presented work describes the features of detector response simulation and considers a method for this simulation using Generative-Adversarial Networks (GAN). A comparative analysis is provided between the proposed generative model and a previously developed parametric signal generation method. Particular attention is paid to data preparation for network training, as well as the formation of feature vectors for Conditional GAN (C-GAN).

Speaker: Mr Дмитрий Баранов (JINR)

-

14:00

-

16:00

→

16:30

Coffee 30m MLIT Conference Hall

MLIT Conference Hall

-

16:30

→

18:30

Application software in HTC and HPC Room 406

Room 406

-

16:30

Мультипарадигменный метод разработки параллельных программ синтеза элементов микро-оптики 15m

Задачами компьютерной науки являются разработка, анализ, применение и оценка различных характеристик алгоритмов, используемых для решения специализированных задач. При этом немаловажную роль в прикладных исследованиях играет стратегия формирования модели предметной области, в рамках которой будут проводиться ассоциации между исследуемыми понятиями, объектами и программными абстракциями [1].

Особенностью представленного в работе подхода является применение мультипарадигменных абстракций с развитой семантикой для разработки программ синтеза элементов микро-оптики с возможностью параллельных вычислений. Реализованные на языке С#, модели расчета элементов микро-оптики объединяют в себе процедурную, объектно-ориентированную и обобщенную парадигмы программирования, что позволяет, с помощью параметрического полиморфизма подтипов, специфицировать требования к аргументам алгоритмов в виде базовых абстракций с дальнейшей возможностью их применения к множеству типов данных.

Алгоритм решения обратной задачи дифракции на некоторой области оптического элемента структурно можно разделить на три этапа: чтение данных (обход входных диапазонов), операция расчёта требуемой фазовой функции в конкретной точке и запись полученного значения в результирующую структуру данных или систему хранения. Использование мультипарадигменного анализа позволило сформулировать критерии общности и изменчивости [2], специфичные для каждого этапа, в терминах которых были определены варианты поведения алгоритма.

На примере нескольких видов оптических элементов (дифракционная сферическая линза, дифракционная цилиндрическая линза, радиально-симметричный аксикон, киноформный аксикон, голографический аксикон) выполнено исследование возможности создания универсального обобщенного алгоритма синтеза микрорельефа с применением мультипарадигменного подхода. Проведена проверка возможности применения распараллеливания расчёта, во время которой для каждого типа элемента использовалась параллельная версия обобщенного алгоритма [3]. Разработанная программная архитектура позволила без осложнений ввести режим распараллеливания расчёта, что обеспечило многократное ускорение при увеличении апертуры элементов без потери качества итоговых изображений.SUMMARY

Using several types of optical elements (diffractive spherical lens, diffractive cylindrical lens, radially symmetric axicon, kinoform axicon, holographic axicon) as an example, a study was conducted to determine the possibility of creating a universal generic algorithm for microrelief synthesis using a multi-paradigm approach. Testing was conducted to determine the possibility of using parallel calculation, during which a parallel version of the generic algorithm was used for each type of element [3]. The software architecture made it possible to implement a parallel computing mode without complications, with multiple acceleration when increasing the aperture of elements without loss of quality of the final images.

[1] Evans, E. Domain-Driven Design: Tackling Complexity in the Heart of Software – Addison-Wesley Longman Publishing Co., Inc., 2004.

[2] Coplien, J., Hoffman, D., Weiss, D. Commonality and variability in software engineering // IEEE Software, V.15, N.6. - 1998, pp. 37-45.

[3] Yablokova L., Lee A., Yablokov D.E. etc. Using a Parallel Approach to Calculating Micro-Optics Elements in DOERIS // 2024 10th International Conference on Information Technology and Nanotechnology, ITNT 2024. — 2024.Speaker: Denis Yablokov -

16:45

Supercomputer modeling of interaction processes of different-material metal nanoclusters 15m

The problem of supercomputer modeling of the processes of creating metallic composite materials from nanomaterial nanoclusters is considered. The general relevance of the research is related to the development of technology for manufacturing nanoscale electronic components. The relevance of a specific study is related to the need to develop both mathematical and software tools for modeling all stages of manufacturing. In this paper, we analyze the final stage of the process, when individual metal clusters interact with each other and with the substrate. These processes are studied by classical molecular dynamics (MD) methods using parallel computing. The focus of the research is to develop an approach to calculating the interaction of different-material metal systems. The problem of interaction of copper and nickel nanoclusters in the manufacture of the corresponding composite is chosen as an example. Preliminary testing confirmed the effectiveness of the developed parallel computing procedure. The work was supported by the Russian Science Foundation, project No. 25-71-20016.

Speaker: Sergey Polyakov (Keldysh Institute of Applied Mathematics) -

17:00

Web component of geometry construction for supercomputer modeling of flow around aircraft 15m

The needs of civil aviation and space research require a comprehensive study of the processes of flow around aircraft, including supersonic flow. In this area, along with empirical experimental studies, mathematical modeling methods are widely used. The classical structure of a computational experiment includes the stage of preparing the initial data, launching the computational application, and analyzing the results obtained. Often, such calculations require the use of supercomputer-level resources. Today, digital platforms are used to simplify the computational experiment. These systems allow performing the entire computational cycle through a unified graphical user interface available on the Internet. The general architecture and core of such a platform were developed by the group of authors earlier. The report proposes to consider one of the directions of the system development. It is related to the construction of the geometry of the streamlined object. The main feature in this case is the implementation of the interface component that allows the preparation of a 2D/3D computational domain and its marking using a web browser. The obtained geometric description allows us to conduct the computational experiment of supersonic flow around the composite object.

The work was supported by the Russian Science Foundation (project No. 25-11-00099, https://rscf.ru/project/25-11-00099/).

Speaker: Nikita Tarasov (KIAM RAS) -

17:15

Parallelization in the modeling of dynamics of a polaron in a constant electric field along molecular chain in Langevin thermostat 15m

Charge transfer processes in biopolymers such as DNA are actively investigated using mathematical and computer modeling. A large number of works are devoted to studies of polaron mechanism of charge transfer. We have modeled the dynamics of Holstain polaron in a chain with small random perturbations and under the influence of a constant electric field.

In the semi-classical Holstein model the region of existence of polarons in the thermodynamic equilibrium state depends not only on the temperature but also on the chain length. Therefore when we compute the dynamics from the initial polaron data, the mean displacement of the charge mass center differs for different-length chains at the same temperature. For a large radius polaron, it is shown numerically that the “mean polaron displacement” (which takes account only of the polaron peak and its position) behaves similarly for different-length chains during the time when the polaron persists. A similar slope of the polaron displacement enables one to find the polaron mean velocity and, by analogy with the charge mobility, assess the “polaron mobility”.

For a temperature prescribed, we compute a set of realizations (dynamics of the system from different initial data and with different pseudo-random time-series) and then calculate trajectories averaged over realizations. This formulation allows for trivial parallelization (using MPI) “one realization – one node” with an efficiency of almost 100%.

To reduce the calculation time, parallelization was performed on a node containing multi-core processors when calculating a realization using shared memory and openMP. The dynamic equations for the n-th site of the chain explicitly include only its closest neighbors. Therefore, the chain is divided into short parts that are integrated at the step independently on different cores of the node, while only for the boundary sites the data calculated by other processes are required. All processes must go to the next iteration synchronously; the synchronization operation also takes time. The longer the chain, the smaller the ratio of exchanged data to calculated data, and the greater the gain from parallelization.

Test studies of the gain t1/tp were made (t1 denotes the execution time of the sequential variant, tp denotes the execution time of the task parallelized using openMP on p threads). Maximum t1/tp ~ 0.9 p allows to significantly reduce the machine time of a realization.

The calculations were performed on the supercomputers k-60 and k-100 installed in the Suреrсоmрutеr Сеntrе of Collective Usage of KIAM RASSpeaker: Nadezhda Fialko (Institute of Mathematical Problems of Biology RAS - the Branch of Keldysh Institute of Applied Mathematics of Russian Academy of Sciences (IMPB RAS- Branch of KIAM RAS)) -

17:30

Методы прогнозирования времени выполнения задач в гетерогенной сети вычислителей 15m

В работе исследуется применимость методов машинного обучения для

прогнозирования времени выполнения задач на узлах гетерогенной высокопроиз-

водительной вычислительной сети. Актуальность проблемы обусловлена необхо-

димостью эффективного использования вычислительных ресурсов и оптималь-

ного планирования задач. В качестве входных данных используется информация

о запусках задач, где известны лишь некоторые параметры программ и использу-

емые ресурсы.Speaker: Виктор Писковский (ВМК МГУ) -

17:45

Праллельная матричная модификация метода муравьиных колоний для решения параметрических задач на гетерогенных вычислителях. 15m

Развитие современных вычислительных систем направлено на увеличение количества вычислителей и ускорителей, в том числе и разнородных: матричных ускорителей, MIMD, SIMD и др., что требует модернизации вычислительных алгоритмов с учетом структуры компьютера. В докладе рассматривается матричная модификация метода муравьиных колоний (ACO) для решения дискретной параметрической задачи, поиска дискретных значений параметров, обеспечивающих оптимальные значения критериев. Значения критериев при этом определяются в результате работы аналитических или имитационных моделей также запускаемых на вычислителе. Для решения параметрической задачи в методе ACO применяется графовая структура данных, в которой для каждого значения каждого параметра выделяется одна вершина, а муравей-агент должен выбрать по одному значению для каждого параметра. Данная структура позволяет рассматривать работу ACO в виде матричных операций и эффективно применять матричные, SIMT и SIMD ускорители. Предложенная матричная модификация, работающая с оптимизированной графовой структурой, позволяет достичь ускорения от 13 до 22 раз по сравнению с оригинальным методом при выполнении на CPU. Во многом такое ускорение обусловлено работой современного оптимизирующего компилятора C++. Предложен алгоритм представления матричного ACO для SIMD ускорителя и гетерогенного вычислителя. Проведены исследования на ускорителе с применением технологии NVIDIA CUDA, ускорение составило от 7 до 20 раз. Применение технологий AVX и OMP позволило обеспечить ускорение до 35 раз, по сравнению с классической реализацией ACO. Для применения ACO на GPU с применением технологии CUDA требуется пересмотр алгоритма, разделение данных по типам памяти, правильное разделение на потоки и блоки, решения проблем синхронизации и передачи данных между CPU и GPU. Предложен алгоритм для гетерогенного вычислителя, выполняющего матричные преобразования на CPU и вычисление пути муравья-агента на GPU, который показал ускорение от 30 до 70 раз. Исследования проводились на различных GPU персональных компьютеров и на высокопроизводительном кластере РЭУ. Проведено теоретическое исследование эффективности применения гетерогенного матричного ACO на гетерогенном вычислителе, состоящем из наборов MIMD ядер и SIMD ускорителей. Предложен подход к определению предела теоретического ускорения алгоритма и оптимальная структура гетерогенного реконфигурируемого вычислителя. Предложены рекомендации по выбору эффективного параллельного алгоритма ACO с учетом времени и принципов взаимодействия, топологии вычислителя и времени выполнения вычислений в модели в процессе определения значения целевой функции.

Speaker: Yurii Titov (Moscow Aviation Institute (national research university)) -

18:00

Адаптация параллельных реализаций солвера глобальной и дискретной оптимизации SCIP к AMPL-форматам для входных и выходных данных 15m

Солвер глобальной и дискретной оптимизации SCIP [1], scipopt.org, развивается с 2005 года и предназначен для решения задач математического программирования, в т.ч. с дискретными переменными, методом ветвей-и-границ-и-отсечений (branch-and-bound-and-cut). С конца 2022 года он свободно доступен в исходных кодах по лицензии Apache 2.0. Хотя по производительности SCIP уступает коммерческим солверам (Gurobi, COPT, CPLEX), он очень полезен в поисковых исследованиях поскольку применим для более широкого класса нелинейных задач, по сравнению с Gurobi и COPT. Типовой сценарий работы с солвером SCIP: подготовка формальной модели задачи и исходных данных для оправки их солверу, получение решения и анализ результатов. Как показала практика, одним из удобных вариантов является использование файлов в форматах стандарта AMPL, ampl.com: NL-файлов для исходной задачи и SOL-файлов для полученного решения. Для генерации NL-файлов и чтения результатов из SOL-файлов удобно применять свободно доступный пакет Pyomo, pyomo.org.

Производительность SCIP может быть существенно повышена за счет работы в параллельном режиме через библиотеку UG (ubiquity generator), ug.zib.de. Разработчики предлагают две параллельные реализации [2, 3]: многопоточный FiberSCIP, для многопроцессорных систем с общей памятью; ParaSCIP, для кластеров с коммуникацией по технологией MPI. К сожалению, эти параллельные солверы не имеют встроенной поддержки AMPL-форматов, что затрудняет их широкое применение.

В докладе описывается простой способ адаптации этих параллельных солверов к AMPL-форматам, где в роли «транслятора» форматов входных и выходных данных используется «обычный» солвер SCIP и несложный Bash-скрипт. В частности, это позволяет применять FiberSCIP и ParaSCIP непосредственно из Python-приложений на базе Pyomo или в сервисах оптимизационного моделирования на основе Everest, optmod.distcomp.org.

Работа выполнена в рамках государственного задания ИППИ РАН, утвержденного Минобрнауки России.

Ссылки

-

K. Bestuzheva, A. Gleixner, T. Koch, M.E. Pfetsch, Y. Shinano, S. Vigerske et al. The SCIP Optimization Suite 9.0 // Optimization Online, 2024, 36 pp. https://optimization-online.org/?p=25734

-

Yuji Shinano. ParaSCIP and FiberSCIP libraries to parallelize a customized SCIP solver. SCIP Workshop 2014, 30.09-2.10, 2014, Zuse Institute Berlin, https://www.scipopt.org/workshop2014/parascip_libraries.pdf

-

Y. Shinano et al. FiberSCIP - A Shared Memory Parallelization of SCIP // INFORMS Journal on Computing, 2018, 30(1), P. 11-30. https://doi.org/10.1287/ijoc.2017.0762

Speaker: Sergey Smirnov (Institute for Information Transmission Problems of the Russian Academy of Sciences) -

-

18:15

Использование MPI for Python для организации очереди выполнения при сканировании поверхностей потенциальной энергии 15m

Параллелизм на уровне процессов является неотъемлемой частью расчетов на крупных вычислительных кластерах. Примером может служить моделирование процесса диффузии отдельных атомов по поверхности твердой фазы. Инструменты для полного сканирования поверхности потенциальной энергии дают исчерпывающую информацию об энергетических барьерах и путях диффузии, но вследствие больших вычислительных затрат редко входят в состав квантово-химических пакетов.

В данной работе предлагается решение этой задачи на примере моделирования диффузии атома водорода по поверхности меди в рамках исследований в области водородной энергетики. Алгоритм сканирования поверхности потенциальной энергии выполнен в виде Python-скрипта, который обеспечивает запуск, распараллеливание и контроль вычислений квантово-химических кодов. Расчеты формируются из пула точек, являющихся узлами решетки сканирования, и представляют собой индивидуальные задачи, связанные через общий набор файлов, что исключает накладные расходы на интенсивный обмен данными между процессами, позволяя минимизировать потери производительности и упростить постановку задачи в очередь выполнения высокопроизводительного кластера.

Работа является продолжением цикла исследований, проводимых в Суперкомпьютерном центре Воронежского госуниверситета, представленного ранее на GRID’2023

Speaker: Александр Романов

-

16:30

-

16:30

→

18:30

Computing for MegaScience Projects Room 420

Room 420

-

16:30

Building, testing and deployment method of SPD application software 15m

This work focuses on the development of a method for automating the processes of building, testing, and deploying application software for the distributed data processing system of the SPD experiment at the NICA collider. The study involves a systematic analysis of the existing development process, identifying key issues such as the high labor intensity of manual operations and the lack of a unified methodology.

Based on the analysis of modern practices and technologies, a comprehensive method has been developed, incorporating GitFlow, CI/CD tools, and containerization using Docker and Apptainer. The methodology automates the creation of virtual environments, building and testing software modules, as well as the publication and deployment of images to container registries.

The practical implementation of the methodology has been deployed in several projects within the SPD collaboration, leading to a reduction in preparation time for releasing new software versions, and improving the quality and reproducibility of software products. This work demonstrates a substantial improvement in development processes for scientific collaborations and can be adapted for other large-scale projects.Speaker: Ринат Короткин -

16:45

Design of the Data Quality Monitoring system for the BM@N experiment 15m

The report presents the design of the Data Quality Monitoring (DQM) system for the BM@N experiment of the NICA project, including a description of the system's objectives, a brief overview of such systems that operate in the CERN LHC experiments, and general approaches to creating the systems. The features of the BM@N experiment are analyzed, such as the rate and volume of data received during the setup operation, and other parameters that must be taken into account when designing the BM@N DQM system. The system architecture, object model, and database scheme are presented, and the structure of configuration files that will be used for fine-tuning the work of the system is described. The future user interface of the Data Quality Monitoring is also discussed.

Speaker: Dr Игорь Александров (JINR) -

17:00

Automation of BM@N Run9 data processing on a DIRAC distributed infrastructure 15m

In 2025, the 9th data-taking run is scheduled for the BM@N experiment. Since February 2023, when data from the 8th run were acquired, the BM@N data processing has been carried out using a geographically distributed heterogeneous infrastructure based on the DIRAC Interware software. For the 9th run, an automated task-launching methodology has been developed. The processing is triggered by the appearance of RAW-type files associated with the 9th run in the DIRAC file catalog. A dedicated service periodically checks the catalog for new files requiring processing and initiates the corresponding tasks. Since BM@N data processing occurs in two stages (first, RAW → DIGI format conversion, followed by DIGI → DST conversion), two task triggers must be defined: one for the arrival of RAW files and another for DIGI files. Automating the processing pipeline enables rapid feedback on the experimental data quality, allowing for timely Data Quality monitoring and issue resolution.

Speaker: Igor Pelevanyuk (Joint Institute for Nuclear Research) -

17:15

Experience of operation the organized grid data analysis using Hyperloop train system 15m

Operational experience with the Hyperloop train system is presented. This framework facilitates organized grid data analysis in ALICE at the Large Hadron Collider (LHC). Operational since LHC Run 3, the system enables efficient management of distributed computing resources via a web-based interface, optimizing workflow execution and resource utilization. Hyperloop structures analyses as modular trains composed of interconnected wagons – configurable workflows handling both user-defined tasks and expert-level services. Key features encompass automated resource estimation, testing, submission, alongside tools for version control and dataset comparison.

Based on multiple years of work as a Hyperloop operator, the role of such framework in running the organized grid-based analysis for large collaborations is highlighted, and its adaptability to other mega-science projects is discussed. Solutions for stability, validation, and user accessibility are highlighted. Furthermore, the application of this operational experience to the development of analysis train systems for the MPD experiment at the NICA collider is discussed.Comment:

Hyperloop is a framework for the grid data analysis in ALICE. It is run 24/7 and operated by four groups by set by geographical considerations. In 2022-2024 I was chairing the team of operators from St. Petersburg State University. Member of ALICE Collaboration since 2011. In 2014-2015 Coordinator of mass Monte Carlo production in ALICE. 2021-2022 expert of PWG-CF working group of ALICE for Monte Carlo modelling. Since 2024 - responsible for grid infrastructure for LHC/NICA in St. Petersburg State University.

Speaker: Vladimir Kovalenko (Saint Petersburg State University) -

17:30

Modern Web Technologies in Event Display Creation for High-Energy Physics 15m